Circumnavigating the Planetary Rover Communications Delay Between Mars and Earth; An Artificial Intelligence Approach

Abstract

This Thesis researched how different Artificial Intelligence techniques have been used to help circumnavigate the communication delay between Mars and Earth, allowing for more planetary rover autonomy and self-learning. It first gives a brief introduction to the current process NASA uses to communicate with space vehicles, including the Perseverance rover. It then looks at the technologies on board the Perseverance mission and how they will be used to explore Mars. The research explored simulations conducted with different Artificial Intelligence techniques that could be applied to allow the rover to operate more autonomously on the Martian terrain. The techniques discussed include Machine Learning, Reinforcement Learning, and Artificial Neural Networks, which are then broken down into more granular approaches (e.g., Monte Carlo methods, search, binary classification, and stochastic optimization).

The focus was to find theoretical techniques that could limit the human intervention needed for the planetary rovers and helicopter to operate. These vehicles would need to understand their surroundings on the Martian terrain and plan the lowest-cost path that would not cause any damage. This Thesis identified techniques that have the potential to work well and described how they reduce the need for human intervention. This, in turn, could help to work around the challenges presented by Mars and Earth communication delays, giving planetary vehicles the chance to operate without constant human involvement. This Thesis concludes with the results of the discussed simulations and with the observation that continued research is needed to improve the capabilities of full autonomy and self-learning.

Introduction

For centuries, mankind has looked to the stars and pondered the philosophical question: are we alone in the universe? This question has sparked both curiosity and ingenuity, paving the way for explorers and, most notably, the great space race. With more and more private companies emerging, such as SpaceX and Blue Origin, reaching for space has become a reality. The question went from “what if” to “when and how”. Although we have made tremendous developments in technology, some areas are moving at a much faster rate than others. Since the introduction of planetary rovers, agencies like NASA have used them to explore extraterrestrial bodies, one being Mars.

Although planetary rovers have solved certain aspects of the problem (i.e., how to study a planet without the accompaniment of humans), they still face many other shortcomings. One of these is the communication delay between celestial bodies; most recently, NASA has faced communication delays between Mars and Earth. Because of this, using a human team to pilot these planetary vehicles in real time has become unrealistic, and a work-around for the need of a human pilot had to be found. This is where Artificial Intelligence has come into play, allowing these vehicles to operate mostly autonomously and become self-learning. This Thesis focused its research mostly on the Perseverance Mars mission and how NASA has attempted to implement some Artificial Intelligence techniques to get around the communications delay affecting its Perseverance rover and Ingenuity helicopter. Solving an issue like this requires major ingenuity, since there is no single solution.

One of the main solutions that this Thesis presented was the use of various Artificial Intelligence techniques across the different areas that touch on this topic. More specifically, it considered techniques from areas such as Machine Learning, Transfer Learning, and Deep Learning (to name a few), as well as some of the algorithms covered by these approaches. To realize space exploration missions, communication plays an important role [1]. This Thesis explored some of the current constraints on Mars and Earth communications, as this is a crucial area of study; for the space industry to fully thrive and expand to massive exploration, there are tremendous challenges that still need to be solved [1].

One of the main issues with using planetary rovers to collect data from other extraterrestrial bodies, or even to respond to navigational instructions (given by a human pilot based on Earth), is the amount of time it takes to send data from Mars to Earth and then from Earth to Mars. There has been great advancement in communication technologies; however, using the existing communication and computational technologies for space exploration requires huge progress in adopting them and in providing the connectivity and coverage that exploration missions need [1]. To be specific, current communication between Mars and Earth goes through orbiting satellites, which gives an advantage for continued communication as the two planets revolve around the sun. The main challenge of communication between remote destinations is the substantial delay due to the distance from the Earth [1].

In addition to the communication delays, analyzing the terrain of a planet like Mars becomes challenging with a human pilot here on Earth. For example, a human pilot would not be able to receive data fast enough to react to rough terrain that could damage the rover’s wheels, or to rough weather during a helicopter flight. This has led to NASA creating a communication relay between (in this example) the Perseverance rover and the Ingenuity helicopter, which would allow the rover to make its own assessment of the terrain.

For the scope of this Thesis, the Perseverance mission (which included the rover and the Ingenuity helicopter) was used as a reference point for the specific technologies it was equipped with and the Artificial Intelligence techniques that were being considered. For example, there are a total of 16 cameras in the Perseverance engineering imaging system, including 9 cameras for surface operations and 7 cameras for entry, descent, and landing (EDL) documentation [2]. This Thesis explores these specifications, and how they are being used, later. Covering the specifics of the hardware on board helped to further understand how it was able to assist with the various learning techniques applied to different parts of the mission.

To really understand the methodology used for the Mars 2020 Perseverance mission, this Thesis researched some of the history of its development. First, it looked at issues with rovers from prior missions, some of their pitfalls, and how those challenges were improved upon for this mission as well as for future missions; this is where research into some of the resulting Artificial Intelligence techniques is being applied. This Thesis is organized as follows: Chapter II provides background and literature about the topic, Chapter III describes the methodologies used in some of the research presented, Chapter IV presents the results of those research experiments, and Chapter V provides concluding remarks.

Background and Literature Review

Currently, humans are trying to explore the Universe and to understand whether we are truly alone. Answering this question presents many challenges. We live in a world where our minds allow us to imagine something and make it a reality; therefore, humans traveling to other planets will one day become possible. This might not answer the question right away, but it will allow us to better understand how life has evolved and will continue to evolve. Since our current technologies have not evolved to the point where they would allow us to physically travel to other planets, we must use machines to explore on our behalf. These machines, although very impressive, have tended to require human intervention, at least in many past iterations. Scientists and engineers therefore decided to try to bridge this gap, which matters more the further the distance from Earth, by improving the software along with the hardware. The hardware is only as good as its software, just as human bodies are only as good as our brains. It is therefore reasonable to theorize that utilizing different Artificial Intelligence disciplines has the potential to become a viable solution. Comparable endeavors here on Earth, such as self-driving cars and unmanned aerial vehicles (UAVs)/drones (to name a few), suggest that this has become a more realistic approach to this exploration path.

Currently, communications rely on satellites that are strategically placed around the Earth and in other areas throughout our solar system. Since these become further apart the further out one travels from the Earth, communications start to experience longer delays, much like attempting to access a website and experiencing load-time delays (especially during the dial-up era). Because Earth and Mars (in this instance) are approximately 123.76 million miles apart (according to NASA), the amount of time it takes data to travel to and from Mars is quite substantial.
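To put the quoted distance in terms of delay, the following minimal sketch converts 123.76 million miles into a one-way and a round-trip signal time. It assumes the signal travels at the vacuum speed of light and ignores relay hops and processing time; the function name is illustrative only.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # defined value of c in vacuum
METERS_PER_MILE = 1_609.344             # international mile


def one_way_delay_seconds(distance_miles):
    """Light travel time over the given distance, ignoring any relay overhead."""
    return distance_miles * METERS_PER_MILE / SPEED_OF_LIGHT_M_PER_S


delay = one_way_delay_seconds(123.76e6)  # distance quoted in the text
print(f"one-way: {delay / 60:.1f} min, round trip: {2 * delay / 60:.1f} min")
```

At this distance a command takes roughly 11 minutes to arrive and the confirmation another 11 minutes to return, which is why joystick-style driving from Earth is not practical.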

Figure 1. NASA's Deep Space Network (DSN) [3].

To get a better understanding of how communication is done between Earth and space missions, we can look at the NASA Deep Space Network (DSN); two of its antennas are depicted in Figure 1. The DSN creates the communication links between Earth and space/Mars missions [3]. It consists of three deep-space communications facilities strategically placed around the Earth, approximately 120 degrees apart: Goldstone, California; Madrid, Spain; and Canberra, Australia. These locations were chosen because they provide continuous observation of any spacecraft as the Earth rotates on its axis.

The DSN antennas are relatively large, measuring around 111 feet by 228 feet. Moreover, the bigger they are, the further they reach into outer space (millions of miles) and the more information they can send and receive. The Mars Science Laboratory, while in its cruise stage configuration, communicates through low- and medium-gain antennas [3]. As an example, while the Mars rover Curiosity is roving on the planet, it communicates with the Mars Reconnaissance Orbiter via its UHF antenna and with the DSN on Earth by way of its high-gain antenna [3]. The DSN currently communicates with almost all spacecraft flying throughout our solar system, from spacecraft observing the sun to those visiting asteroids and other planets, which makes the DSN very busy trying to track all of them. Therefore, the Mars Science Laboratory spacecraft must share time on the DSN antennas. A sophisticated scheduling system with a team of hundreds of negotiators around the world ensures that each mission's priorities are met.

During important mission events, such as a Mars landing, multiple DSN antennas on Earth, along with antennas on the Mars Reconnaissance Orbiter, track the signals from the landing spacecraft to help mitigate any loss of communication during this critical time. While the spacecraft is approaching the landing phase of its mission, the Mars Science Laboratory utilizes the Multiple Spacecraft Per Aperture (MSPA) capability, which allows a single antenna to receive up to four spacecraft signals, or downlinks, at a time, in conjunction with the relay capabilities of the Mars Odyssey and Mars Reconnaissance Orbiter spacecraft. MSPA only allows one spacecraft at a time to have the uplink. Using the Curiosity rover as an example, commands are sent early in the Martian day (sol) for about 30 minutes, providing the instructions for its activity. The rover’s downlink sessions (sending information from the rover to Earth) occur about twice per sol, at around 15 minutes each, per relay orbiter (Mars Odyssey and Mars Reconnaissance Orbiter). These consist of two sessions, one overnight and one in the late afternoon. The relay allows the rover to communicate with Earth even when the rover itself has no direct path, because the orbiters consistently have Earth in their direct path.

The data rate at which the Curiosity rover can send directly to Earth, without the relay through the orbiters, varies from about 500 to 32,000 bits per second, which is about half the speed of a home modem. The data rate to the orbiter relays can be as high as 2 million bits per second for the Mars Reconnaissance Orbiter and 128,000 to 256,000 bits per second for Mars Odyssey, the latter being around 4 to 8 times faster than a home modem. An orbiter passes over the area where the rover is located each sol and can communicate for about 8 minutes at a time. During this communication window, anywhere from 100 to 250 megabits of data can be transmitted to the orbiter. By comparison, the same 250 megabits would take up to 20 hours to transfer directly to Earth from the rover itself. Due to power constraints, the Curiosity rover is also limited to only a few hours’ worth of data transfer directly to Earth. Moreover, Mars rotates on its axis and will therefore turn its back on Earth, taking the Curiosity rover with it. Not only can the orbiters see Earth longer per sol than Curiosity, but they also have much more power and bigger antennas.
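The figures above can be related to one another with simple arithmetic. The sketch below is illustrative only: it assumes one megabit is 10^6 bits and a constant data rate over the whole transfer, and the function names are hypothetical.

```python
def transfer_time_hours(data_bits, rate_bits_per_second):
    """Hours needed to move data_bits at a constant rate."""
    return data_bits / rate_bits_per_second / 3600.0


def implied_rate_bps(data_bits, hours):
    """Average rate that moves data_bits in the given number of hours."""
    return data_bits / (hours * 3600.0)


payload = 250e6  # 250 megabits, the upper figure quoted for one orbiter pass

# Direct-to-Earth at the best quoted rate of 32,000 bits per second.
print(f"direct, best case: {transfer_time_hours(payload, 32_000):.2f} h")
# Average rate implied by the quoted "up to 20 hours" direct transfer.
print(f"implied direct rate: {implied_rate_bps(payload, 20):.0f} bit/s")
# Average rate implied by sending the same payload in one 8-minute pass.
print(f"implied relay rate: {implied_rate_bps(payload, 8 / 60):.0f} bit/s")
```

Under these assumptions, the 20-hour figure corresponds to an average direct rate of a few thousand bits per second, which sits inside the quoted 500 to 32,000 bits per second range, while a single 8-minute relay pass averages several hundred thousand bits per second.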

Due to the sheer amount of time and data needed to plan rover routes, the need for self-determining and self-driving rover exploration comes into play. Artificial Intelligence as a whole is not a one-stop solution, but one part of a solution for circumnavigating the communications delay. Exploring some of the different techniques and disciplines that Artificial Intelligence can bring to the table is crucial if we want to find answers to our life-long question. To get a better understanding of the current ground technologies, this Thesis also explored the technologies on board both the Perseverance rover and the Ingenuity helicopter.

Perseverance Mission

NASA’s Mars 2020 Perseverance mission launched on July 30, 2020, at 7:50 am EDT and landed on February 18, 2021, at 3:55 pm EDT, with a mission duration of at least one Mars year, or about 687 Earth days [4]. The Perseverance rover (the successor to the Curiosity rover) was designed to better understand the geology of Mars and seek signs of ancient life [5]. Besides collecting and storing rock and soil samples that will one day be sent to Earth, it will also test and demonstrate new technologies for future missions. The Perseverance rover was tasked with key scientific objectives that support the Mars Exploration Program's (MEP) science goals. “The goal of the Mars Exploration Program is to explore Mars and to provide a continuous flow of scientific information and discovery through a carefully selected series of robotic orbiters, landers and mobile laboratories interconnected by a high-bandwidth Mars/Earth communications network”. The objectives are: looking for habitability, by identifying past environments capable of supporting microbial life; seeking biosignatures, by seeking signs of possible past microbial life in those habitable environments, particularly in special rocks known to preserve signs of life over time; caching samples, by collecting core rock and soil samples and storing them on the Martian surface; and preparing for humans, by testing oxygen production from the Martian atmosphere [4].

Key Hardware

Figure 2. Mars Perseverance rover [7].

Figure 3. Mars Perseverance onboard technologies [8][9][10][11][12][13][14].

On board the Perseverance rover, seen in Figure 2, are seven instruments to help test science and new technologies, shown in Figure 3: Mastcam-Z [8], SuperCam [9], PIXL [10], SHERLOC [11], MOXIE [12], MEDA [13], and RIMFAX [14] [5]. Mastcam-Z is an advanced camera system capable of panoramic and stereoscopic imaging, with the ability to zoom. It will also determine surface mineralogy, which will assist with rover operations. SuperCam, similarly to Mastcam-Z, can provide imaging and mineralogy of the surface, but from a distance, with the added benefit of providing chemical composition. The Planetary Instrument for X-Ray Lithochemistry (PIXL) will be used to focus on a specific area at a micro (sub-millimeter) scale. Scanning Habitable Environments with Raman & Luminescence for Organics and Chemicals (SHERLOC) is a spectrometer that provides fine-scale imaging and uses an ultraviolet laser to map mineralogy and organic compounds. SHERLOC includes a high-resolution color camera for microscopic imaging of the surface. The Mars Oxygen In-Situ Resource Utilization Experiment (MOXIE) is an experimental instrument that will convert carbon dioxide from the Martian atmosphere to oxygen. The Mars Environmental Dynamics Analyzer (MEDA) is a set of sensors that will provide weather measurements (temperature, wind speed and direction, pressure, relative humidity, and dust shape and size). Lastly, the Radar Imager for Mars Subsurface Experiment (RIMFAX) is a radar that can penetrate the surface of Mars, providing centimeter-scale resolution of the subsurface geologic structure.

Additional technology shown in Figure 2 includes the Rover Sample Caching System, the Coring Drill, and the Laser Retroreflector (LaRA). The Sample Caching System will collect core samples from the surface, seal them in tubes, and then leave them for future missions to retrieve and bring back to Earth [15]. The Coring Drill will be used to core the surface to collect rock samples [16]. The Laser Retroreflector reflects a laser pulse back in the direction it came from, so that the pulse’s time of flight can be measured, which allows for accurate recording of its location [17].

Technology

Some of the technologies that will be tested during this mission are an autopilot called Terrain Relative Navigation, a set of sensors that gather data during the landing, and an autonomous navigation system that will allow the rover to drive faster in tough terrain.

Mars Helicopter Ingenuity

In addition to the hardware and technologies mentioned above, the Perseverance mission also includes Ingenuity, the Mars Helicopter [24]. Ingenuity, attached to the belly of the Perseverance rover, is a small autonomous aircraft with an experimental mission that works independently of the rover. The main mission of Ingenuity is to test flight in the thin Martian air; such aircraft could potentially be used as future robotic scouts. Because Ingenuity is just a demonstration of the technology, it was not designed as support for this mission. However, the theoretical approaches that can be learned from it will be discussed in some research experiments later in this Thesis.

From the above-mentioned background on some of the limitations presented by the time delay in communications, this Thesis aimed to see how some of the techniques learned within this degree could be applied within this field. It is important to understand that this is purely theoretical and may never be applied to these missions. In the next section, this Thesis will present how some of this research was conducted focusing most specifically on the Perseverance mission, with some mentions of the Curiosity rover.

Methodology

In this chapter, this Thesis looks at some of the work already done within this area of research, including currently applied Artificial Intelligence techniques as well as some theorized techniques. The work in [19] applied Machine Learning techniques and enhanced heuristics for terrain safety to the baseline ENav software of NASA’s Perseverance rover. Enhanced AutoNav, or ENav for short, is the baseline navigation software used for the Perseverance rover. ENav sorts a list of potential paths for the rover to traverse and then uses the Approximate Clearance Evaluation (ACE) algorithm to evaluate whether the most highly ranked paths are indeed safe [19]. Although ACE plays a crucial role in the safety of Perseverance, it is computationally expensive: if ACE finds the most promising path to be untraversable, ENav must continue down the list, evaluating the next-best path, until one is found to be traversable. The experiments in [19] provided two heuristics that made the ranking of the paths more efficient prior to the ACE evaluation.
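The rank-then-verify loop described above can be illustrated with a minimal sketch. This is not ENav itself: the path representation, the cost functions, and the `ace_is_safe` stand-in are all hypothetical, chosen only to show why a better ranking reduces the number of expensive safety checks.

```python
def select_safe_path(candidate_paths, ranking_cost, ace_is_safe):
    """Try paths in order of increasing ranking cost until one passes the
    (expensive) safety check. Returns (path or None, number of checks)."""
    evaluations = 0
    for path in sorted(candidate_paths, key=ranking_cost):
        evaluations += 1
        if ace_is_safe(path):
            return path, evaluations
    return None, evaluations


# Toy candidates: shorter paths here happen to cross rougher terrain.
paths = [
    {"name": "A", "length": 10.0, "roughness": 0.9},
    {"name": "B", "length": 11.0, "roughness": 0.8},
    {"name": "C", "length": 12.0, "roughness": 0.1},
]


def ace_is_safe(path):
    # Stand-in for the real ACE check: only low roughness is traversable.
    return path["roughness"] < 0.5


def baseline_cost(path):
    # Ranking that ignores terrain roughness.
    return path["length"]


def heuristic_cost(path):
    # Ranking that adds a roughness penalty, in the spirit of the heuristics.
    return path["length"] + 10.0 * path["roughness"]


print(select_safe_path(paths, baseline_cost, ace_is_safe)[1])
print(select_safe_path(paths, heuristic_cost, ace_is_safe)[1])
```

Both rankings end on the same safe path because the safety check is unchanged; only the number of checks needed to reach it differs.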

The ACE algorithm

Figure 4. Height Map (Top Left), Gradient Map (Top Right), Rover Kernel (Bottom Left), Cost Map (Bottom Right) [19].

Given the height map in Figure 4 (top left), where the red square represents the scale of the rover itself, Sobel operators can be used to create a gradient or squared-gradient map, shown in Figure 4 (top right). The gradient map can then be convolved with a kernel representing the orientation-agnostic footprint of the rover, shown in Figure 4 (bottom left), to form the cost map in Figure 4 (bottom right), which estimates the cost to traverse a location within the map.

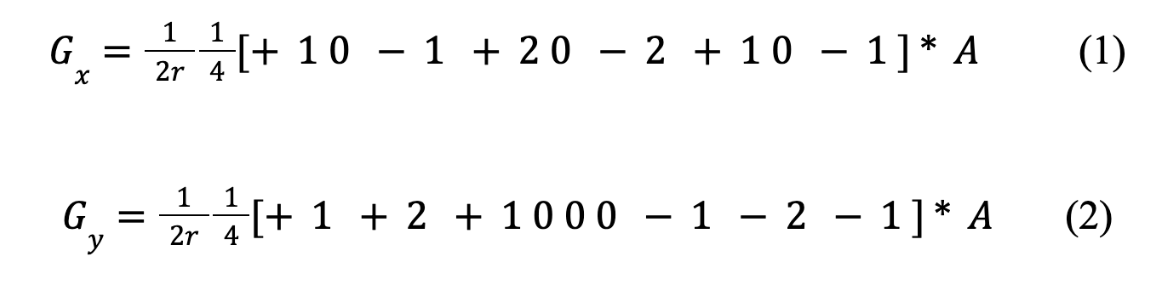

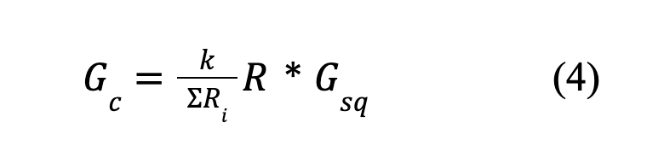

The first heuristic (hand-designed) used Sobel operators (the Sobel operator, sometimes referred to as the Sobel-Feldman operator or Sobel filter, is used in image processing and computer vision [18]) and convolution to incorporate the cost of traversing high-gradient terrain [19]. The ACE algorithm considers the terrain’s irregularity under the Perseverance rover’s wheels. The Gradient Convolution heuristic was designed to help in this area by assessing the terrain’s roughness at the specific points where the Perseverance rover’s wheels might be. To get a better picture of how this heuristic works, consider the panels of Figure 4. Step 1 is to convolve the height map in Figure 4 (top left) with normalized 3x3 Sobel operators to find the local x and y gradients, where A is the height map itself, r is the width of one square cell in the height map, and * is the convolution operator.
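The Step 1 equations are not reproduced in this text. A reconstruction consistent with the definitions of A, r, and * above, assuming the standard 3x3 Sobel kernels normalized by 8r (the symbols G_x and G_y are introduced here for illustration and may differ from the notation in [19]), is:

```latex
G_x = \frac{1}{8r}
\begin{bmatrix} 1 & 0 & -1 \\ 2 & 0 & -2 \\ 1 & 0 & -1 \end{bmatrix} * A,
\qquad
G_y = \frac{1}{8r}
\begin{bmatrix} 1 & 2 & 1 \\ 0 & 0 & 0 \\ -1 & -2 & -1 \end{bmatrix} * A
```

The factor 1/(8r) turns the filter response into a slope (rise over run): each side of the kernel has weights summing to 4, and the two sides are 2r apart.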

Step 2 is to find the squared gradient map (Figure 4, top right) as the element-wise sum of the squared x and y gradients.
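Writing G_x and G_y for the x and y gradient maps from Step 1 and using an open circle for the element-wise product (notation assumed here, not taken from [19]), Step 2 can be written as:

```latex
G^2 = G_x \circ G_x + G_y \circ G_y
```

Squaring removes the sign of the slope, so uphill and downhill terrain of equal steepness contribute equally.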

Step 3 is to convolve the squared gradient map with a kernel representing the heading-agnostic footprint of the rover (Figure 4, bottom left). The footprint is an annulus, with an outer radius corresponding to the furthest extent of any wheel and an inner radius corresponding to the closest any wheel gets to the pivot point. Elements of the kernel that lie within the annulus have a value of 1; all others have a value of 0. The result is normalized by dividing by the sum of the non-zero elements in the footprint kernel and multiplied by a parameterized cost factor [19].
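With R denoting the footprint (rover) kernel, k the cost factor, and G^2 the squared gradient map from Step 2, the description above corresponds to the following expression; the symbol C for the resulting cost map is an assumption made here for illustration:

```latex
C = \frac{k}{\sum_{i,j} R_{ij}} \left( R * G^2 \right)
```

Because R contains only zeros and ones, the sum in the denominator is simply the number of cells inside the annulus, so C is k times the average squared gradient under the wheel footprint.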

In Step 3, R denotes the rover kernel and k the cost factor; the result is the gradient convolution cost map in Figure 4 (bottom right). Since convolution and Sobel operators are commonly used in computer vision, efficient implementations of these methods are widely available. During the initial terrain analysis step in the ENav planning cycle, the gradient convolution cost is calculated for each cell in the cost map and included in the overall cost of that cell. Therefore, the heuristic contributes not only to the cost of the terrain traversal along each path, but also to the Dijkstra-based estimate of cost from the end of each path to the overall goal.
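The three steps can be sketched in plain Python as follows. This is an illustrative sketch, not the implementation from [19]: it assumes Sobel kernels normalized by 8r, zero padding at the map border, and radii expressed in cells, and all function names are hypothetical.

```python
import math


def convolve_same(image, kernel):
    """2-D convolution with zero padding; output has the shape of image."""
    rows, cols = len(image), len(image[0])
    k_rows, k_cols = len(kernel), len(kernel[0])
    ci, cj = k_rows // 2, k_cols // 2
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            total = 0.0
            for u in range(k_rows):
                for v in range(k_cols):
                    y, x = i - (u - ci), j - (v - cj)
                    if 0 <= y < rows and 0 <= x < cols:
                        total += kernel[u][v] * image[y][x]
            out[i][j] = total
    return out


def annulus_kernel(inner_radius, outer_radius):
    """Binary footprint: 1 inside the annulus, 0 elsewhere (radii in cells)."""
    half = int(math.ceil(outer_radius))
    return [
        [1.0 if inner_radius <= math.hypot(dx, dy) <= outer_radius else 0.0
         for dx in range(-half, half + 1)]
        for dy in range(-half, half + 1)
    ]


def gradient_convolution_cost(height_map, cell_width, footprint, cost_factor):
    """Steps 1 to 3: Sobel gradients, squared gradient, footprint average."""
    scale = 1.0 / (8.0 * cell_width)
    sobel_x = [[scale * v for v in row]
               for row in ((1, 0, -1), (2, 0, -2), (1, 0, -1))]
    sobel_y = [[scale * v for v in row]
               for row in ((1, 2, 1), (0, 0, 0), (-1, -2, -1))]
    gx = convolve_same(height_map, sobel_x)            # Step 1, x gradient
    gy = convolve_same(height_map, sobel_y)            # Step 1, y gradient
    g2 = [[a * a + b * b for a, b in zip(ra, rb)]      # Step 2
          for ra, rb in zip(gx, gy)]
    norm = sum(sum(row) for row in footprint)          # cells in the annulus
    summed = convolve_same(g2, footprint)              # Step 3
    return [[cost_factor * v / norm for v in row] for row in summed]


# A uniform ramp with slope 0.1: away from the border, the cost is k * 0.1**2.
ramp = [[0.1 * 0.5 * j for j in range(15)] for _ in range(15)]
cost_map = gradient_convolution_cost(ramp, 0.5, annulus_kernel(1.0, 2.0), 2.0)
print(round(cost_map[7][7], 6))
```

Cells near the border pick up artificial gradients from the zero padding; a real planner would need an explicit policy for unknown terrain at the map edge.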

The second heuristic (machine-learned) used a Machine Learning model to more accurately predict areas that would be marked as untraversable by ACE, based on the height map in Figure 4 (top left). In contrast to the Gradient Convolution heuristic, this heuristic was learned automatically using a data-driven framework: a Deep Convolutional Neural Network (DCNN) based model was developed that could directly predict the outcome of the ACE algorithm for a given terrain height map. In the experiments, physics-based simulations were used to collect the training data for the Machine Learning model, and Monte Carlo trials were run to quantify the navigational performance across a variety of terrains with numerous slope and rock distributions [19]. Compared to ENav’s baseline performance, the added heuristics showed a significant reduction in ACE evaluations and in average computation time per planning cycle, increased path efficiency, and maintained, or in some cases even improved, the rate of successful traverses. Using this method, ENav could sort its potential paths more optimally and reduce the average number of ACE evaluations required to find a safe path.

This experiment formulated the problem as supervised-learning-based classification, where the DCNN model was based on a modified encoder-decoder style U-Net architecture and implemented using the TensorFlow framework [19]. The encoder consists of a sequence of convolutional layers that down-sample the input to a low-dimensional feature map. The decoder consists of up-sampling layers with convolutions that take this feature map and increase the resolution back to that of the original input. A series of residual connections from the encoder to the decoder feature maps helps to restore the original high-resolution details that are lost during the initial down-sampling, and also prevents vanishing gradients during training. The input to this model is a height map and the output is the ACE map. The output is encoded with a multi-channel representation, where each channel represents a cardinal heading angle for the rover. In the experiments conducted in [19], a discretization of 8 heading angles (at 45-degree intervals) was found to be sufficient. Sigmoid activation was applied to each channel to give a value in the range [0, 1] corresponding to the probability of a cell having infinite ACE cost. From there, a Monte Carlo simulation was used to gather training data from the baseline ENav algorithm across 1,500 terrains, randomly sampling 8 height maps from each path. For each cell in each sampled height map, the ACE algorithm was run with the 8 fixed rover heading values, which resulted in the ACE map. There were 12,000 height maps in total, with a training set of 9,500 and a validation set of 2,500. This learned heuristic model achieved a 97.8% training accuracy with a 95.3% validation accuracy. The result of this prediction is the probability of ACE returning a safety violation, which is different from what the ACE algorithm itself is intended to produce. Predicting the actual ACE costs would become a dual problem of classification and regression, which the above-mentioned segmentation alone could not solve.
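The per-cell output encoding described above can be illustrated without a neural network library. The sketch below is a simplified stand-in for the output layer only, not the U-Net from [19]: it applies a sigmoid to 8 hypothetical raw scores (one per 45-degree heading) and scores thresholded predictions against labels. The 0.5 threshold and all names are assumptions.

```python
import math

HEADINGS_DEG = [i * 45 for i in range(8)]  # 8 fixed headings, 45 degrees apart


def sigmoid(x):
    """Numerically stable logistic function mapping any real to (0, 1)."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def ace_probabilities(raw_scores):
    """Turn one cell's raw per-heading scores into violation probabilities."""
    return [sigmoid(z) for z in raw_scores]


def accuracy(probabilities, labels, threshold=0.5):
    """Fraction of entries whose thresholded prediction matches the label."""
    predictions = [1 if p >= threshold else 0 for p in probabilities]
    matches = sum(1 for p, y in zip(predictions, labels) if p == y)
    return matches / len(labels)


# Hypothetical raw scores for one cell, and the ACE labels for each heading
# (1 means ACE reported a safety violation for that heading).
scores = [-3.0, -1.5, 0.2, 2.5, 4.0, 1.0, -0.5, -2.0]
labels = [0, 0, 0, 1, 1, 1, 0, 0]
probs = ace_probabilities(scores)
print([round(p, 2) for p in probs], accuracy(probs, labels))
```

Because each heading has its own sigmoid, the channels are independent: a cell can be predicted unsafe for some headings and safe for others.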

To integrate this model with the ENav algorithm, the height maps were passed to a TensorFlow process, which then returned an ACE prediction map to ENav. When ENav performs the initial path ranking step, the ACE map is sampled at regular intervals along each path, evenly evaluating the predictions for the two fixed headings closest to the current heading. The ACE prediction is then multiplied by a cost factor and added to the accumulated cost of each path. The average ACE map value is included in the cost map and is used for the Dijkstra-based estimate of cost from the end of each path to the overall goal. The sorted list is still evaluated by the original ACE algorithm, which allows this approach to leverage the benefits of Machine Learning while maintaining the same safety guarantees.
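The path-ranking contribution described above can be sketched as follows. This is a hedged illustration rather than the ENav integration itself: it assumes the two closest headings are the pair that brackets the current heading, that "evenly" means an equal-weight average, and that the ACE prediction map is indexed as `ace_map[row][col][heading_index]`. All names are hypothetical.

```python
def bracketing_headings(heading_deg, step_deg=45):
    """Indices of the two fixed headings on either side of heading_deg."""
    count = 360 // step_deg
    lower = int((heading_deg % 360) // step_deg)
    return lower, (lower + 1) % count


def learned_path_cost(path_samples, ace_map, cost_factor):
    """Accumulate the learned cost over samples taken along one path.

    path_samples: list of (row, col, heading_deg) at regular intervals.
    ace_map[row][col]: list of 8 predicted violation probabilities.
    """
    total = 0.0
    for row, col, heading in path_samples:
        a, b = bracketing_headings(heading)
        cell = ace_map[row][col]
        # Equal-weight average of the two neighbouring heading channels.
        total += cost_factor * 0.5 * (cell[a] + cell[b])
    return total


# A 1 x 2 prediction map: the first cell is safe for every heading, the
# second has elevated risk for the headings at 0 and 45 degrees.
ace_map = [[[0.0] * 8, [0.2, 0.4, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]]]
samples = [(0, 0, 10.0), (0, 1, 30.0)]
print(learned_path_cost(samples, ace_map, 10.0))
```

The resulting value would be added to each path's other cost terms before sorting; the sorted paths would still be checked by ACE itself.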

Figure 5. A view of the ENav simulation environment [19].

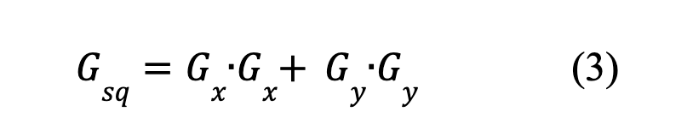

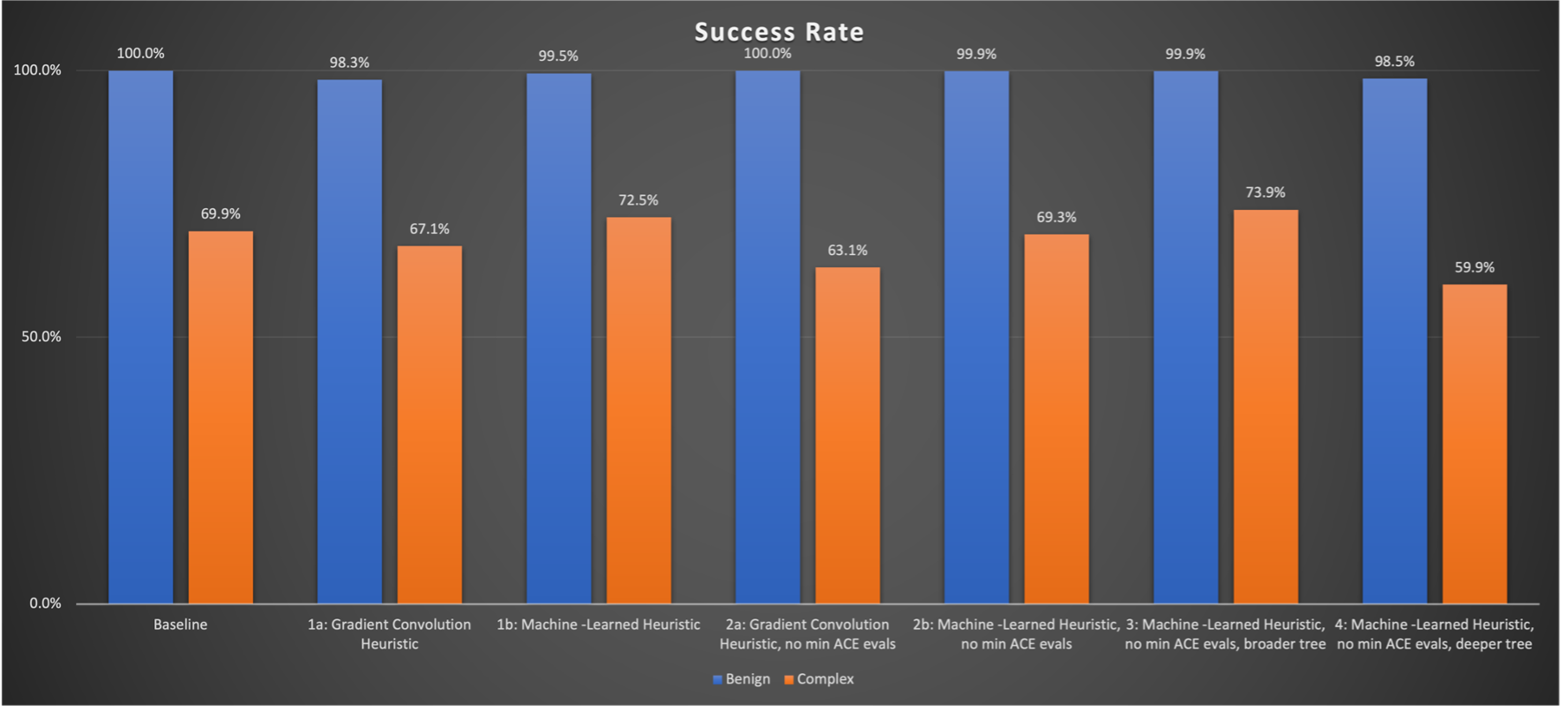

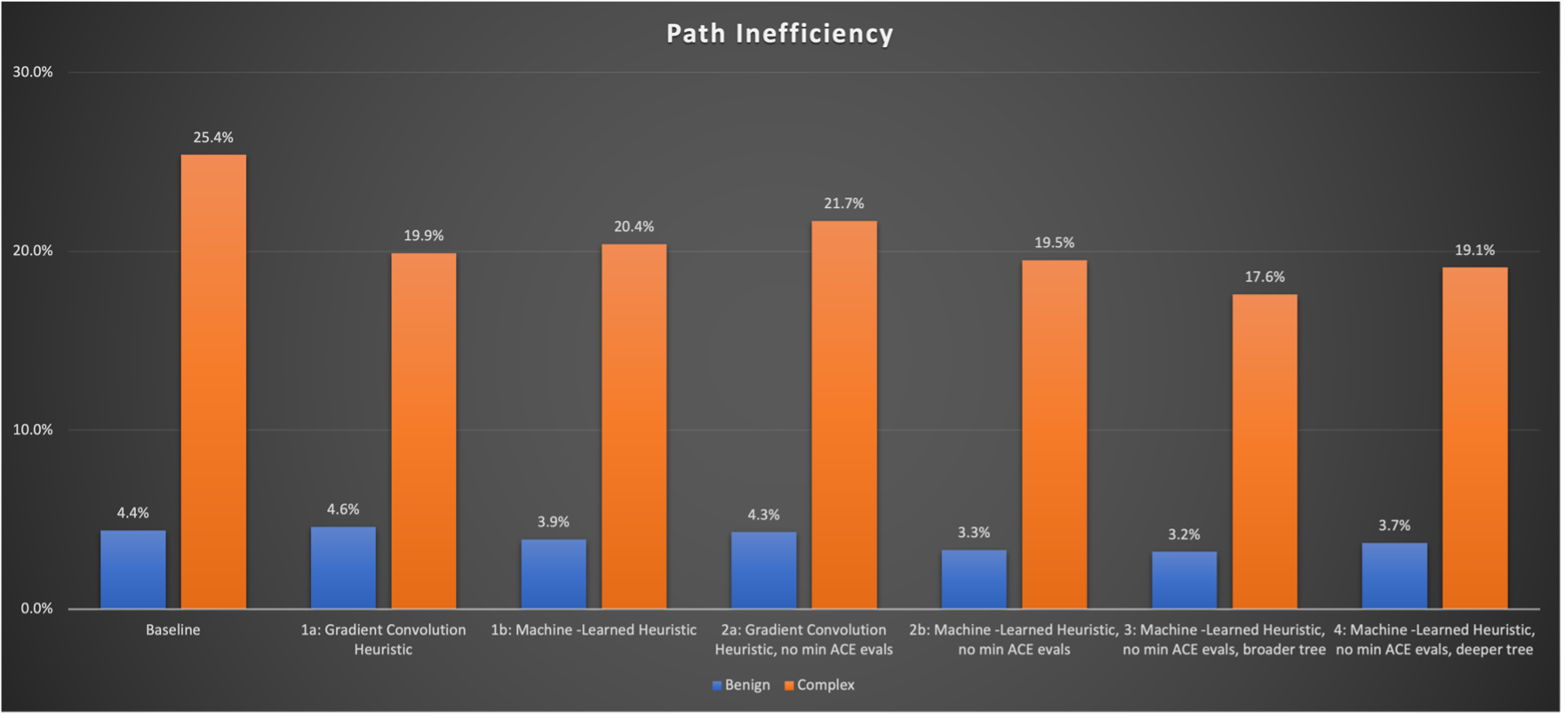

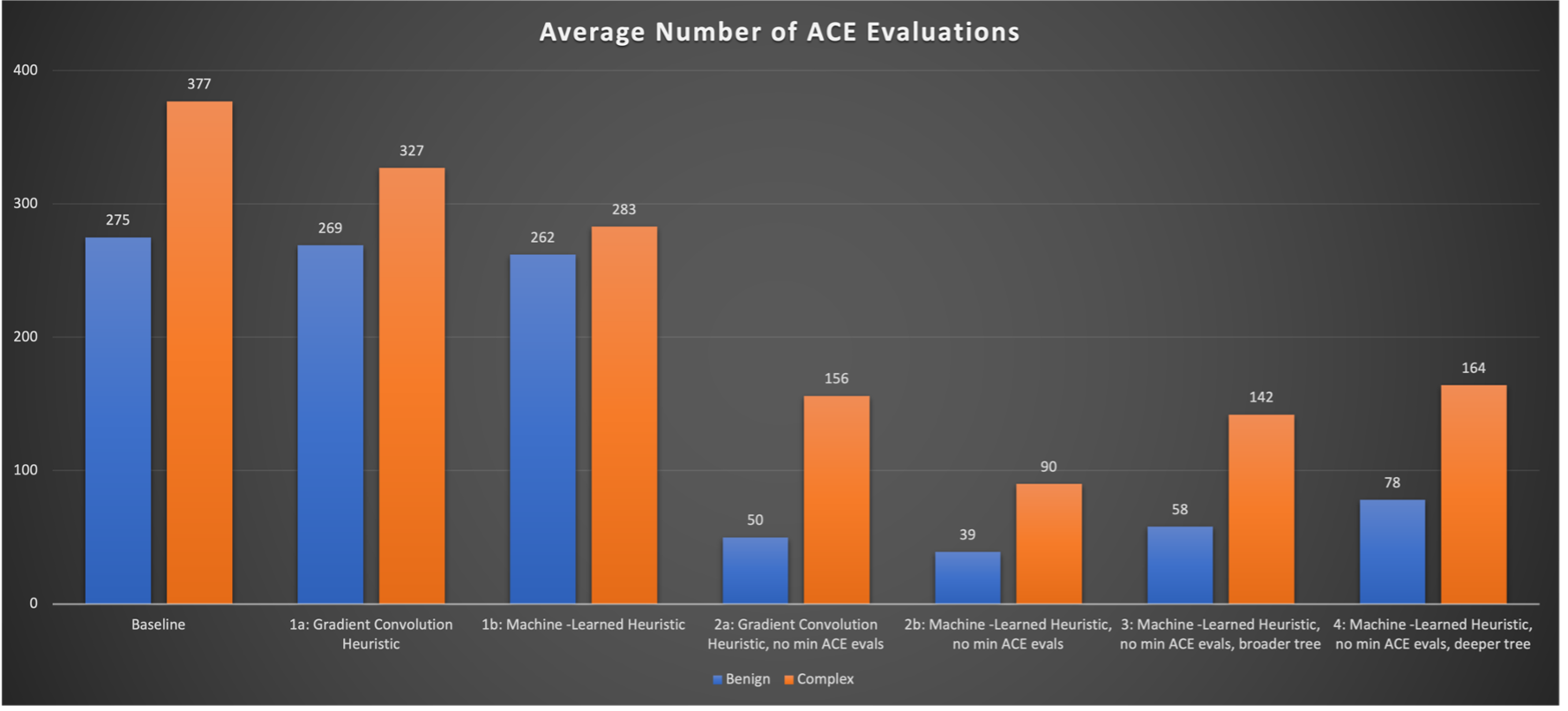

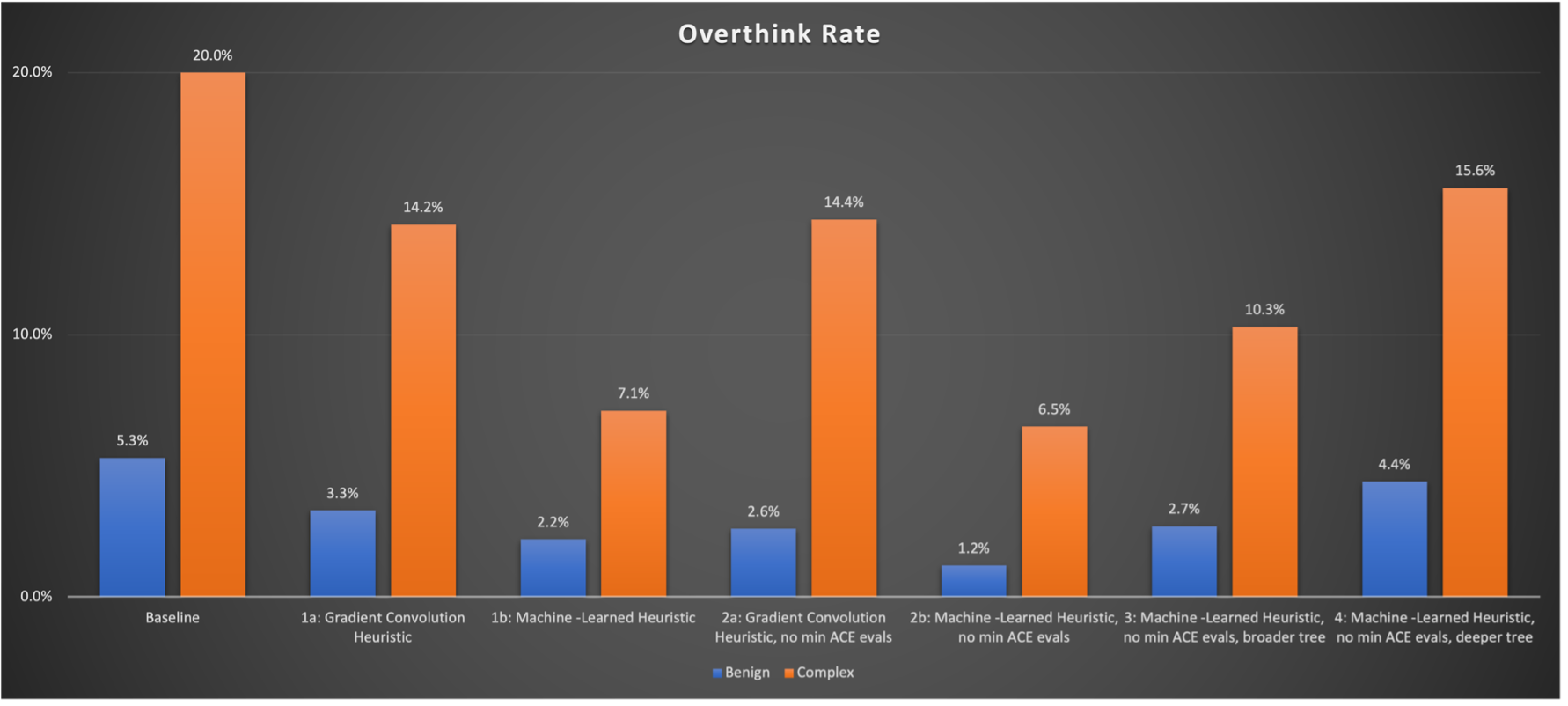

As seen in Figure 5, the green-yellow terrain shows how the Gradient Convolution heuristic, developed in [19], has assessed the cost of traversing the terrain (yellow regions are higher cost) and steers the rover toward safer regions. Figures 6, 7, 8, and 9 show comparisons across multiple experiments with the different algorithms, using both Benign and Complex data samples. Figure 6 shows the Success Rate percentages, Figure 7 shows the Path Inefficiency percentages, Figure 8 shows the Average Number of ACE Evaluations, and Figure 9 shows the Overthink Rate. Both heuristics were integrated into the baseline ENav algorithm and used to sort the Perseverance rover’s paths more effectively prior to the ACE evaluation step. This shows that, by incorporating the Machine Learning classifier into the ranking process while still checking paths for safety with ACE, safety does not have to be sacrificed.

Figure 6. Success Rate measured across multiple experiments.

Figure 7. Path Inefficiency measured across multiple experiments.

Figure 8. Average Number of ACE Evaluations measured across multiple experiments.

Figure 9. Overthink Rate measured across multiple experiments.

Where to Map?

The next work, presented in [20], describes an experiment that included the Mars Ingenuity helicopter, in which its onboard technologies would help identify the most optimal paths that the Perseverance rover could take. Although Ingenuity was sent with the intent to demonstrate flight on Mars, it could potentially become additional eyes for the Perseverance rover and even for future manned missions. Ingenuity’s high-resolution data could help the Perseverance rover identify small hazards, such as rocks or bumpy ground, that may be in the way of the chosen paths. In addition, Ingenuity could provide rich textural information useful for predicting perception performance. The research considered a three-agent system composed of the Perseverance rover, Ingenuity, and a Mars orbiter. Its objective was to provide good localization to the rover by selecting an optimal path that minimizes the accumulation of localization uncertainty during the rover’s traverse. Using the proposed iterative copter-rover path planner, the research addressed where Ingenuity should map and where the Perseverance rover should drive. Numerous simulations using the map of the Mars 2020 landing site were conducted to demonstrate the effectiveness of the planner.

As noted in [19], damage to the Perseverance rover’s wheels was, and remains, a big concern. Small rocks that remain undetected and are not considered by long-range strategic planners can cause unforeseen problems; although they are small (micro-structures in nature), they can cause critical damage. It is theorized that Ingenuity would be able to send crucial high-resolution data that would help the rover detect such small hazards in advance. Ingenuity could also provide richer information that could further help the Perseverance rover’s path planning. Due to the lack of a Global Positioning System (GPS) on Mars, older methods like Wheel Odometry (WO) and Visual Odometry (VO) are heavily relied on for positioning these vehicles. After a few drives, the Perseverance rover’s remote sensing capability is strongly impacted by localization performance. Perception-aware planning is one of the more effective methods to address this issue: it aims at improving perception results by actively choosing future measurement targets. In subsequent work, the performance of VO localization was improved by using a predictive perception technique that actively chooses the timing and camera direction to obtain an optimal image sequence. This problem is typically approached using a Partially Observable Markov Decision Process (POMDP) or belief-space planning, in which the planner chooses the most optimal actions under motion and sensing uncertainty. The rover’s localization performance could increase over good terrain, a property called localizability. Since the satellites provide a fairly poor-quality map of the terrain, the research team [19] conducted a joint-space search over the Perseverance rover’s path and Ingenuity’s perceptive actions, while taking dynamic map updates from Ingenuity.
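The idea of choosing a route that limits accumulated localization uncertainty can be illustrated with a much simpler technique than the planner discussed above. The sketch below swaps in plain Dijkstra search on a grid, where each cell carries a hypothetical uncertainty growth value (lower where terrain is more localizable); it is not the iterative copter-rover planner or a belief-space planner, and all names and values are assumptions.

```python
import heapq


def min_uncertainty_path(growth, start, goal):
    """Dijkstra search on a 4-connected grid.

    growth[r][c] is the uncertainty added when the rover enters cell (r, c).
    Returns (list of cells from start to goal, accumulated uncertainty).
    """
    rows, cols = len(growth), len(growth[0])
    best = {start: 0.0}
    parent = {}
    queue = [(0.0, start)]
    while queue:
        cost, cell = heapq.heappop(queue)
        if cell == goal:
            break
        if cost > best.get(cell, float("inf")):
            continue  # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols:
                new_cost = cost + growth[nr][nc]
                if new_cost < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = new_cost
                    parent[(nr, nc)] = cell
                    heapq.heappush(queue, (new_cost, (nr, nc)))
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    path.reverse()
    return path, best[goal]


# The middle column is feature-poor terrain where odometry drifts quickly.
growth_map = [
    [1.0, 9.0, 1.0],
    [1.0, 9.0, 1.0],
    [1.0, 1.0, 1.0],
]
route, uncertainty = min_uncertainty_path(growth_map, (0, 0), (0, 2))
print(route, uncertainty)
```

In this toy map the longer detour around the feature-poor column accumulates less uncertainty than the direct route. In the scenario described above, new high-resolution data from Ingenuity would change the per-cell values and could change the chosen route.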

In related work within this area, [19] reviewed Multi-Agent Systems in both Terrestrial and Planetary Exploration. Within Terrestrial Applications, a lot of research has been done on using an aerial vehicle in conjunction with a ground vehicle. Studies of Unmanned Aerial Vehicles (UAVs) typically use the Dijkstra algorithm for real-time motion control. They utilize multiple active sensors to avoid mid-air collisions, and the most recent research has studied decentralized Visual Simultaneous Localization and Mapping (SLAM) and planning under uncertainty. A big concern, however, is that UAVs are very resource limited due to computational and battery constraints, amongst others. Therefore, more research is being conducted on spreading this load across additional vehicles. Planetary or Extra-Terrestrial Exploration presents much bigger issues, as estimating a vehicle’s location on Mars is much less accurate. Some research described in [19] mentions an integrated system for coordinating multi-rover behavior with the overall goal of collecting planetary surface data. Subsequent research has focused on constructing evaluation functions in dynamic, noisy, and communication-limited environments, as well as on bridging the gap between high-level mission specifications and low-level navigation and control techniques under environment uncertainty between the Perseverance rover and Ingenuity. Additionally, research has been conducted on data sharing between the Perseverance rover and a Mars orbiter.

In this theoretical mission, Ingenuity was treated as a scout that would collect data on each Martian Sol, to be used before the Perseverance rover planned the path it would traverse that day. This was based on the limitations of the current capabilities, as provided by NASA directly. In this scenario, Ingenuity could fly for only 90 seconds per day and could traverse only at a low speed (around 4 centimeters per second), provided there were no obstacles in its path. In the first step of this scenario, Ingenuity is deployed from the Perseverance rover to the ground, and it then takes off directly from the ground after the rover has driven a certain distance away. This is intended to protect the rover from any potential damage.

This experiment used Pose Belief Propagation, in which a pose belief is essentially a probability distribution over the possible states of the given vehicle. The environment was approximated as a map represented by a regular grid with n cells, and the error model was simplified by assuming that error accumulation is independent of local motion types and proportional to the distance traveled. Since the localizability map cannot be built for every location before it is traversed, a belief state is maintained based on remote sensing data, including satellite measurements. Because of this approach, the problem is computationally tractable. The results of this experiment in [19] will be further discussed in Chapter IV.
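To make the grid-based error model more concrete, the sketch below searches a small grid for the path that accumulates the least localization error, with error growing in proportion to the distance traveled. It is only an illustration under stated assumptions: it uses a plain Dijkstra search rather than the iterative belief-space planner of the cited research, and the function name `plan_min_uncertainty_path` and the per-cell error rates are hypothetical.

```python
import heapq

def plan_min_uncertainty_path(error_rate, start, goal):
    """Dijkstra search over a 4-connected grid.

    error_rate[r][c] is a hypothetical growth rate of localization error per
    cell traveled (lower means better localizability).
    Returns (accumulated error, list of cells from start to goal).
    """
    rows, cols = len(error_rate), len(error_rate[0])
    best = {start: 0.0}
    parent = {}
    frontier = [(0.0, start)]
    while frontier:
        cost, cell = heapq.heappop(frontier)
        if cell == goal:
            break
        if cost > best[cell]:
            continue  # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols:
                # Each move covers one cell, so the added error is the rate
                # of the cell being entered.
                new_cost = cost + error_rate[nr][nc]
                if new_cost < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = new_cost
                    parent[(nr, nc)] = cell
                    heapq.heappush(frontier, (new_cost, (nr, nc)))
    # Walk back from the goal to recover the path.
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    path.reverse()
    return best[goal], path

# Hypothetical 3x3 localizability map: the middle row is poorly textured
# except for its right-most cell.
grid = [
    [1.0, 1.0, 1.0],
    [9.0, 5.0, 1.0],
    [1.0, 1.0, 1.0],
]
total_error, path = plan_min_uncertainty_path(grid, (0, 0), (2, 0))
print(total_error, path)
```

In this toy map the shortest route crosses a cell with poor localizability, so the planner prefers the longer detour through well-textured cells.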

Figure 10. Shows the trade-off between higher and lower altitude [20].

Figure 10 shows how the Perseverance rover and Ingenuity could work together to map out the Martian terrain. Notice that the higher Ingenuity is above the ground, the more distance it covers, but at a loss of resolution. Closer, higher-resolution images of the terrain give a much more accurate representation of the landscape, making for a more precise estimate of the optimal path. Ingenuity could also serve as a better image gatherer than an orbiter, because it is much closer to the ground.
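The altitude trade-off can be illustrated with basic camera geometry. The sketch below assumes a downward-pointing camera over flat terrain; the field of view and pixel count are hypothetical values chosen for illustration, not Ingenuity’s actual camera parameters.

```python
import math

def ground_footprint_m(altitude_m, fov_deg):
    """Width of ground covered by a downward-pointing camera (flat terrain assumed)."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)

def ground_sample_distance_m(altitude_m, fov_deg, pixels_across):
    """Ground distance covered by a single pixel."""
    return ground_footprint_m(altitude_m, fov_deg) / pixels_across

# Hypothetical camera: 90-degree field of view, 1000 pixels across.
for altitude in (2.0, 10.0):
    width = ground_footprint_m(altitude, 90.0)
    gsd = ground_sample_distance_m(altitude, 90.0, 1000)
    print(f"altitude {altitude:4.1f} m: footprint {width:5.1f} m, {gsd * 100:.2f} cm per pixel")
```

Both the footprint and the size of a pixel on the ground scale linearly with altitude, which is why wider coverage comes at the cost of coarser detail.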

Autonomous Safe Landing Site Detection for a Future Mars Science Helicopter

As technology advances, it is worth looking at the next generation of some of these vehicles. In [22], the research team at JPL discussed the concept for the successor of Ingenuity, called the Mars Science Helicopter (MSH). This next-generation helicopter would learn from Ingenuity and incorporate enhancements based on the results accumulated from testing Ingenuity’s capabilities in the Martian environment. The Mars Science Helicopter would potentially hold a science payload between 1 and 5 kg, with a flight range of up to 25 km at up to 100 m altitude, or 8 minutes’ worth of additional hover time for science data acquisitions. The concept would address a specific science need, which requires the capability to autonomously identify and land on safe landing sites. Over the years, there have been many approaches to safe landing site detection for autonomous landing.

NASA, for instance, developed autonomous landing hazard avoidance for spacecraft landing, originally intended for the Moon, which included a Lidar (Light Detection and Ranging) for 3D perception of landing hazards. A different approach, called the Lander Vision System (LVS), was deployed on the Mars 2020 mission. The Lander Vision System uses a monocular camera to estimate the spacecraft position during descent with respect to an on-board map with predetermined hazard locations. This allows the landing trajectory to be diverted if a landing hazard is present. However, the constant constraints of power, size, and weight prevent the use of a Lidar on a Mars rotorcraft, and maps with the resolution required to detect landing hazards for such rotorcraft are not available up front.

In this work, several individual approaches for 3D reconstruction and mapping are considered, including the maplab open-source framework, which combines several 3D reconstruction and mapping research algorithms. Because these algorithms are computationally too demanding for on-board processing on a small, embedded processor, they could not be applied. The same holds for several component approaches that require a high-end GPU for near real-time execution. Landing site detection in unknown terrain requires a robust method of on-board 3D perception, while the goal is also to maximize the science payload on the Mars Science Helicopter. Both can be served by coupling the 3D reconstruction approach with the on-board state estimator. This presents two advantages: first, imagery data from the down-facing navigation camera can be reused, and second, outputs from the estimator can directly be used as pose priors for the structure-from-motion approach. This in turn reduces the computational cost of the necessary camera pose reconstruction within the structure-from-motion approach compared to conventional vision-only approaches.

Figure 11. 3D reconstruction example. Left: rectified reference image from UAS flight; Right: reconstructed range image (warm colors are closer to the camera) [22].

Figure 11 shows the 3D representation of the experiment in [22] based on the terrain (on the left), with warmer colors (in the right image) marking points closer to the camera, which this Thesis treats as the less safe landing locations. The warm areas will typically represent hazardous rocks and terrain. 3D measurements are processed into a multi-resolution elevation map, which doubles the resolution at each consecutive layer, as shown in Figure 12.

Figure 12. Reconstructed Map resolutions for different layers. Blue: base layer; Green: consecutive layers store residuals. Colored squares in each layer cover the same ground [22].

The base layer has the lowest resolution and carries the aggregated height estimate for all measurements within the footprint of a cell in this layer, while the higher-resolution layers maintain the difference between the height estimate at the current layer and the aggregated coarser layers. The significance of this approach is that the measurement accuracy of the 3D point cloud is directly tied to the pixel footprint on the ground, since the 3D points are reconstructed from images taken of the terrain, and detecting hazards at the base layer (lowest resolution) is much more efficient. By following a dynamic level-of-detail approach, a new measurement with an assigned resolution only updates the map layer to which it is assigned. The landing site detector can then work top-down to locate safe landing locations. This is more efficient because, if any of the lower-resolution layers has already been flagged, the detection is aborted for that specific landing location.
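The layered map described above can be sketched in a few lines. The example below is a simplified illustration, not the implementation from [22]: it assumes a square grid whose side is a power of two, averages 2x2 blocks to form coarser layers, stores residuals in the finer layers, and uses the residual magnitude as a stand-in hazard criterion. The names `build_layers`, `reconstruct`, and `is_safe`, as well as the `max_roughness` threshold, are hypothetical.

```python
def downsample(grid):
    """Average each 2x2 block into one coarser cell."""
    n = len(grid) // 2
    return [[(grid[2 * r][2 * c] + grid[2 * r][2 * c + 1]
              + grid[2 * r + 1][2 * c] + grid[2 * r + 1][2 * c + 1]) / 4.0
             for c in range(n)] for r in range(n)]

def build_layers(heights, levels):
    """Return [base, residual_1, ...], ordered from coarse to fine."""
    pyramid = [heights]
    for _ in range(levels - 1):
        pyramid.append(downsample(pyramid[-1]))
    pyramid.reverse()  # coarsest first
    layers = [pyramid[0]]
    for coarse, fine in zip(pyramid, pyramid[1:]):
        # Each finer layer stores only the difference to the coarser estimate.
        layers.append([[fine[r][c] - coarse[r // 2][c // 2]
                        for c in range(len(fine))] for r in range(len(fine))])
    return layers

def reconstruct(layers):
    """Add the residual layers back onto the base layer."""
    current = layers[0]
    for residual in layers[1:]:
        current = [[current[r // 2][c // 2] + residual[r][c]
                    for c in range(len(residual))] for r in range(len(residual))]
    return current

def is_safe(layers, row, col, max_roughness):
    """Top-down check of one finest-layer cell; stop at the first flagged layer."""
    finest = len(layers) - 1
    for level in range(1, len(layers)):
        shift = finest - level
        if abs(layers[level][row >> shift][col >> shift]) > max_roughness:
            return False  # a coarser layer already flags a hazard
    return True

# Flat 4x4 terrain with a single 0.4 m rock in one corner.
heights = [[0.0] * 4 for _ in range(4)]
heights[3][3] = 0.4
layers = build_layers(heights, levels=3)
print(is_safe(layers, 0, 0, max_roughness=0.2), is_safe(layers, 3, 3, max_roughness=0.2))
```

Because the check runs from coarse to fine, a cell rejected at a coarse layer never triggers the more expensive inspection of the finer layers.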

Figure 13. Map extraction at different resolutions [22].

Figure 13 shows the extracted map at resolutions increasing from left to right. As in Figure 11, the warmer regions represent the hazardous landing locations. What counts as a hazardous landing site depends on the vehicle itself; however, the criteria typically consist of slope, rock size, roughness, and confidence of the 3D map rendering. In the next chapter, this Thesis will discuss some of the results of these different Artificial Intelligence techniques and what can be learned and applied towards the future.

Multi-Objective Reinforcement Learning–based Deep Neural Networks for Cognitive Space Communications

[23] explored future communication subsystems of space exploration missions and how future missions could potentially benefit from software-defined radios (SDRs) controlled by Machine Learning algorithms. The research proposed a novel hybrid radio resource allocation management control algorithm that integrates multi-objective Reinforcement Learning and Deep Learning Artificial Neural Networks. The objective was to efficiently manage communications systems resources by monitoring performance functions with common dependent variables that result in conflicting goals. Additionally, the proposed approach would use virtual environment exploration, which would enable on-line learning by interactions with the environment and restrict poor resource allocation performance.

One communications technology discussed in this research was Software-Defined Radio (SDR), which is said to be typically used in terrestrial applications. This technology is currently deployed onboard the International Space Station in a setup consisting of three SDRs operating at S-band and Ka-band. SDRs, however, add complexity to operations, due to the large number of parameters needed to modify link configurations. Even so, they are enabling research on cognitive space communications. Another discussed technology is the cognitive radio, which possesses environmental awareness across each layer of the environment. It is capable of autonomously performing perception, learning, and reasoning to optimize resources at the individual node level. In more recent years, Machine Learning has played an important role in wireless communications, in an attempt to enable full cognition in these radios. Some research on Machine Learning techniques for cognitive radios focused on the learning problem. The majority of this work addressed terrestrial cognitive radios, space communications resource allocation, and spectrum sensing. Building upon this previous research, a Reinforcement Learning solution was considered. The problem presented here was that Reinforcement Learning spends too much time exploring actions that result in low performance scores. This research therefore presented a hybrid algorithm which used Reinforcement Learning and an Artificial Neural Network. This approach would allow the radio to predict the effects of multi-dimensional radio parameters on multi-dimensional conflicting performance goals before allowing the radio to try any of these parameters over the air. Moreover, less time and fewer resources would be spent on learning the action-performance mapping.
The results of this research will be presented in the next section, but the idea presented here is a promising theoretical approach to the need for autonomy in Mars and Earth communications.

Results

Looking back at some of the research done in the previous section, this Thesis will present some of the results that may have promising implementation possibilities. The research done in [6] focused on the SuperCam and ChemCam instruments onboard the Perseverance and Curiosity rovers, respectively, and addressed the operation of robotic instruments on the Mars surface. As the equipment carried on these missions becomes more advanced, so do the requirements for operating these instruments, which call for much more autonomy. When gathering Martian terrain samples (e.g., rocks), precise targeting becomes crucial for proper sampling. Moreover, because of the round-trip communication constraints between Mars and Earth, visual targeting must be done efficiently on board the rover, which has very limited computing hardware. Using Oriented FAST and Rotated BRIEF (ORB) features for landmark-based image registration, this research proposed a novel approach to filtering false landmark matches, which employs a random forest classifier to automatically reject failed alignments.

The proposed precision targeting algorithm was evaluated on 3,800 images gathered from the Curiosity rover’s Remote Micro-Imager (RMI) over 1,200 sols of operations, together with metadata on the instrument pointing. The algorithm was implemented using the OpenCV implementation of ORB feature extraction and RANSAC-based homography finding, and a random forest with 100 trees. The team used 16,500 alignments produced by their algorithm to train the random forest classifiers. This technique used the noisy RMI metadata to identify successful cases when more than 150 landmark pairs made it past the filters. Under consistent lighting conditions and with an image overlap of 33%, the approach correctly located the target identified in the first image within the second image in 80% of cases. This percentage translates to time saved or to a new capability for targeting small features on Martian terrain samples. This experiment focused specifically on SuperCam/ChemCam, yet the approach could still be applied to some of the other instruments onboard, such as the Planetary Instrument for X-Ray Lithochemistry (PIXL) and the Scanning Habitable Environments with Raman and Luminescence (SHERLOC), as mentioned in Chapter II. This approach has the potential to help future missions become more autonomous, by allowing the rover’s onboard instruments to cross-reference images more precisely for sampling and potentially for path traversing.
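The idea of rejecting false landmark matches with RANSAC can be sketched without any imaging library. The example below is a deliberately simplified stand-in: it fits a pure 2-D translation instead of the homography used in [6], it does not include ORB feature extraction or the random forest classifier, and the synthetic matches are made up for illustration. Only the threshold of more than 150 surviving landmark pairs is taken from the description above; `ransac_translation` and `alignment_accepted` are hypothetical names.

```python
import random

def ransac_translation(matches, tolerance=2.0, iterations=200, seed=0):
    """Estimate a 2-D shift between two images from landmark matches.

    matches: list of ((x1, y1), (x2, y2)) pixel pairs, possibly with false matches.
    Returns ((dx, dy), inliers): the best shift and the matches consistent with it.
    """
    rng = random.Random(seed)
    best_shift, best_inliers = (0.0, 0.0), []
    for _ in range(iterations):
        (x1, y1), (x2, y2) = rng.choice(matches)  # minimal sample: one match
        dx, dy = x2 - x1, y2 - y1
        inliers = [m for m in matches
                   if abs(m[1][0] - m[0][0] - dx) <= tolerance
                   and abs(m[1][1] - m[0][1] - dy) <= tolerance]
        if len(inliers) > len(best_inliers):
            best_shift, best_inliers = (dx, dy), inliers
    return best_shift, best_inliers

def alignment_accepted(inliers, min_pairs=150):
    """Treat an alignment as successful when enough landmark pairs survive."""
    return len(inliers) > min_pairs

# Synthetic data: 200 correct matches shifted by (12, -5) plus 40 false matches.
true_matches = [((10 * (i % 20), 10 * (i // 20)),
                 (10 * (i % 20) + 12, 10 * (i // 20) - 5)) for i in range(200)]
false_matches = [((7 * i, 13 * i), (8 * i + 50, 11 * i - 80)) for i in range(40)]
shift, inliers = ransac_translation(true_matches + false_matches)
print(shift, len(inliers), alignment_accepted(inliers))
```

In the cited work, alignments that pass this kind of geometric filtering are further screened by the trained classifier, which is the step that makes failed registrations detectable on board.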

The experiments done in [19], as mentioned in Chapter II, show two promising heuristics, one hand-written (using Sobel Operators and Convolution) and one from a machine-learned model. In their experiment, they built a Monte Carlo simulation environment for testing ENav and Mars surface navigation, using the Robot Operating System (ROS) for inter-process communication. One ROS node wraps ENav, while an additional node wraps the HyperDrive simulator (HDSim). This simulator mimics the rover’s motion, terrain slipping and settling, and disparity images from rover missions, with artificial terrains, including elevations and rock density, being loaded into HDSim. When the simulation is run, ENav starts from the initial point (one side of the map) with an 80 m goal distance.

A simulation run was considered successful if the goal was reached, and failed if an optimal path wasn’t found, the time limit was exceeded, or a safety violation occurred. When running the simulation with the Machine Learning model, an additional ROS node running TensorFlow was used. This model received the height map mentioned earlier and published ACE estimates. Due to system limitations between HDSim (running on 32-bit systems) and TensorFlow (running on 64-bit systems), a ROS multi-master system was needed to communicate between computers. The experimentation yielded potential gains in computation time, on the notion that each cell of the ACE algorithm takes 10 to 20 milliseconds on the RAD750 flight processor, which takes up the majority of ENav’s computation time.

The first experiment tested whether adding the hand-designed and machine-learned heuristics to the baseline ENav software would improve the tracked performance metrics. The simulations were run with a Monte Carlo approach, using the same artificial terrains and parameters as the baseline ENav software. The experiment with the Gradient Convolution heuristic yielded mixed results. In the less complex simulations, the results were relatively comparable to the baseline software: the success rate dropped slightly, but the overthink rate decreased. In the more complex simulations, the success rate dropped further (69.9% to 67.1%), but other metrics improved (e.g., path traverse inefficiency decreased from 25.4% to 19.9%). The hand-designed heuristic did show potential, though at the cost of a reduced success rate in the more complex simulations.
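For readers unfamiliar with the Sobel operator behind the hand-designed heuristic, the sketch below computes a Sobel gradient magnitude over a small height map. It illustrates the filtering step only; how [19] turns such gradients into a path cost is not reproduced here, and the height map is a made-up example.

```python
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]

def apply_kernel(grid, kernel, r, c):
    """Apply a 3x3 kernel centered on (r, c); the caller keeps (r, c) off the border.

    The kernel is not flipped (cross-correlation form), which leaves the
    gradient magnitude unchanged.
    """
    return sum(kernel[i][j] * grid[r + i - 1][c + j - 1]
               for i in range(3) for j in range(3))

def gradient_magnitude(height_map):
    """Sobel gradient magnitude for interior cells; border cells are left at 0."""
    rows, cols = len(height_map), len(height_map[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = apply_kernel(height_map, SOBEL_X, r, c)
            gy = apply_kernel(height_map, SOBEL_Y, r, c)
            out[r][c] = (gx * gx + gy * gy) ** 0.5
    return out

# Hypothetical height map with a 1 m step between the second and third columns.
step_terrain = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
flat_terrain = [[0.0] * 4 for _ in range(4)]
step_gradient = gradient_magnitude(step_terrain)
flat_gradient = gradient_magnitude(flat_terrain)
print(step_gradient[1][1], flat_gradient[1][1])
```

Cells near the step receive a large gradient value, while flat terrain stays at zero, so a planner can use the result as a cheap indicator of where the terrain is likely to be difficult.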

The machine-learned heuristic, however, showed much more potential across both the less complex and the more complex simulations. Success rate improved from 69.9% to 72.5%, while path traverse inefficiency decreased from 25.4% to 20.4%. The overthink rate, which relates to the number of ACE evaluations, decreased from 20.0% to 7.1% compared to the baseline software. This decrease would allow the rover to stop less often before finding the next optimal path. Additionally, the research indicates that the safety of the ACE algorithm would not be violated or impacted by adding a machine-learned heuristic. Additional simulations with other approaches, which can be found in [19], yielded similar results and likewise indicate that rover safety constraints are not violated by the added heuristics; the machine-learned heuristic, however, was the top performer. This shows how machine-learned approaches can offer better performance and efficiency.

Next, we can look at the results from [20]. This research considered a three-agent system composed of the Perseverance rover, Ingenuity, and the Mars Orbiter, with the objective of providing good localization to the Perseverance rover by selecting an optimal path that minimizes the accumulation of localization uncertainty during the rover’s traverse. The reported results, however, are those of a two-agent system consisting of the Perseverance rover and Ingenuity.

Figure 14. Shows the Mars terrain for a given location, taken by the High Resolution Imaging Science Experiment (HiRISE) [20].

Figure 15. Represents the where-to-map and iterative Perseverance rover path result between the Ingenuity’s high-resolution and low-resolution cameras [20].

Using Figures 14 and 15, and assuming that the lower-left corner is coordinate (0, 0, 0), the Perseverance rover starts at coordinate (20, 20) with a goal of (140, 140), and Ingenuity starts at (30, 70, 2) with a goal of (120, 140, 2). Figure 15 shows the different potential paths that the Perseverance rover and Ingenuity could take based on different terrain assumptions. N in the figure represents the number of images Ingenuity can take. The amount of uncertainty reduction at a given measurement altitude is greatly influenced by the resolution of the camera. The solid blue line represents the initial rover path, while the dashed orange line represents the updated rover path using the iterative planner. The solid red line shows Ingenuity’s path, the red point represents its position, and the red square represents the field of view of Ingenuity’s camera.

Table 1. High-Resolution Updates based on results from [20].

Table 2. Low-Resolution Updates based on results from [20].

Table 3. Reduction Rates in Monte Carlo Simulation Result [20].

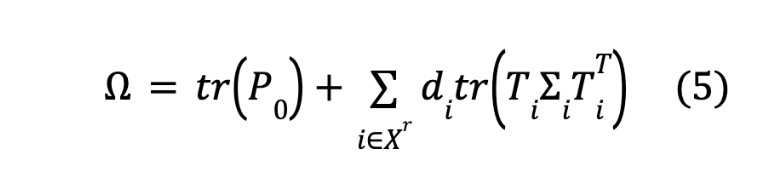

The observation points listed in Table 1 (high-resolution camera) and Table 2 (low-resolution camera) represent the locations at which Ingenuity will capture images of the Martian terrain, along with the reduction rates of the Perseverance rover’s pose hyper-belief shown in Equation 5. The results show the reduction in the Perseverance rover’s worst-case odometry error uncertainty. Note that the rover’s uncertainty decreases as Ingenuity captures more images.

Figure 16. Monte Carlo Simulation results with different initial starting points for the Perseverance rover [20].

Figure 16 shows a variation of their experiment, run as a Monte Carlo study, using both the rover and Ingenuity to plan the routes. These simulations compared their algorithm with a baseline random mapping approach, in which Ingenuity randomly selects a starting point on the Perseverance rover’s path. Their algorithm obtained a 10 to 20% gain in uncertainty reduction, whereas the baseline random mapping approach gave less than a 10% gain. This showed that the limited resources of Ingenuity could help reduce the Perseverance rover’s localization error. This research also showed the trade-off between using Ingenuity’s high-resolution and low-resolution cameras to make Mars terrain planning more precise, which represents a where-to-map problem. More research will be done in this area, as when-to-map considerations remain open.

In the research done in [22] on autonomous safe landing site detection, the simulations were run over Victoria Crater on Mars, using the JPL DARTS simulator to validate the 3D renders of the Martian terrain.

Figure 17. Represents the simulated flight environment [22]

(a): Simulated UAS over Mars Victoria

(b): Current navigation camera image with overlaid tracked features

(c): Current reference point

(d): Past view

(e): Height map generated from stereo disparity map

(f): Aggregated elevation map

(g): Landing site map

Figure 17 shows a simulated flight using DARTS that passes over the crater rim at a 20 m altitude above the terrain. The green areas are considered safe landing sites, while the red areas are considered not safe. The state estimator is used for the tracked features. The figure shows that landing sites are detected on flat terrain outside the crater, but not on the terrain below the rim, which violates the slope constraint. To test the ability of the landing site detector to detect smaller landing hazards, the team placed randomly sized rocks on a flat ground plane, which was reconstructed in 3D. This was simulated by producing a point cloud for a simulated camera at each individual camera position directly from the terrain. The point clouds were then overlaid with Gaussian noise corresponding to a 0.25-pixel disparity error for a fixed baseline between cameras of 4.8 m at a flight altitude between 5 m and 6 m. Figure 18 (a), below, shows the 3D constructed representation of the randomly sized and placed rocks located on the flat terrain. Figure 18 (b) shows the detection results for the different rock sizes. For these simulations, a Monte Carlo evaluation was performed using a randomly generated terrain for each test flight.
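The link between a disparity error in pixels and the resulting height noise can be made explicit with the standard first-order stereo relation: since depth is z = f·b/d, a disparity error σ_d maps to a depth error of roughly z²·σ_d/(f·b). The sketch below applies this relation to the baseline, altitude, and disparity error quoted above. The focal length is not given in the text, so `FOCAL_PX` is a purely hypothetical value and the printed numbers are illustrative only.

```python
def depth_noise_std_m(range_m, baseline_m, focal_px, disparity_std_px):
    """First-order depth error of a stereo pair: sigma_z = z^2 * sigma_d / (f * b)."""
    return range_m ** 2 * disparity_std_px / (focal_px * baseline_m)

FOCAL_PX = 500.0  # hypothetical focal length in pixels; not stated in the text

for altitude in (5.0, 6.0):
    sigma = depth_noise_std_m(altitude, baseline_m=4.8, focal_px=FOCAL_PX,
                              disparity_std_px=0.25)
    print(f"altitude {altitude} m: depth noise std {sigma * 1000:.2f} mm")
```

The quadratic dependence on range is the reason why flying lower, or using a longer baseline, makes small rocks easier to separate from measurement noise.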

Figure 18. (a): The 3D constructed map from the randomly sized and placed rocks on a flat plane (green being safe and red being hazardous); (b): Rock detection rates using random rock distribution [22].

During the tests, detecting every rock represented in the 3D reconstruction would yield 100% accuracy. Rock detection performed better on larger rocks than on smaller ones. For these experiments, the team leveraged existing sensor data and data processing products to minimize the impact on size, weight, and power. The approach was tested on multiple simulated and real environments, which demonstrated the viability of a vision-based perception approach for safe landing site detection. The landing site detection navigation module is in the process of being implemented on board the Mars Science Helicopter. The team plans to continue the research once the Mars Science Helicopter is ready for UAS flights.

Expanding on the research done in [23], the proposed hybrid method consisted of a Reinforcement Learning agent and a standard multilayer Artificial Neural Network. It considered a multi-dimensional parameter optimization of radio configurations, which attempts to achieve the best multi-objective performance possible given the current satellite communication channel conditions. Using Virtual Exploration, the Artificial Neural Network allows the Reinforcement Learning agent to eliminate the time spent on exploring actions that are predicted to yield poor performance, a step called Action Rejection, which improves the exploration performance. A feedforward network trained with the Levenberg-Marquardt backpropagation algorithm was used, with three fully connected layers. These consisted of two hidden layers with 7 and 50 neurons, respectively, which used a log-sigmoid transfer function, and an output layer with one neuron using the standard linear transfer function. At the training stage, the data was split randomly into 70% for training, 15% for testing, and 15% for validation. The introduction of the Artificial Neural Network for virtual exploration allowed the radio to drastically decrease the time spent and the number of packets used on exploring actions that resulted in poor performance, when compared to the maximum performance achieved, by rejecting all actions predicted to perform below a threshold.
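Action Rejection can be sketched as a small change to ordinary epsilon-greedy exploration: before an exploratory action is tried, a predictor screens out the candidates expected to perform poorly. The example below is a minimal illustration and not the algorithm from [23]: the trained neural network is replaced by a hypothetical stand-in function `predicted_score`, the ten abstract actions are placeholders for radio configurations, and the 0.56 threshold is borrowed from the performance level reported in Table 4 purely for illustration.

```python
import random

def choose_action(actions, q_values, predict_performance, threshold, epsilon, rng):
    """Epsilon-greedy selection with virtual exploration.

    When exploring, actions whose predicted performance is below `threshold`
    are rejected before anything is tried over the air.
    """
    if rng.random() < epsilon:
        candidates = [a for a in actions if predict_performance(a) >= threshold]
        if candidates:
            return rng.choice(candidates)
    # Exploit, or fall back to the best known action when every candidate was rejected.
    return max(actions, key=lambda a: q_values[a])

def predicted_score(action):
    """Hypothetical stand-in for the trained network's performance prediction."""
    return action / 10.0

ACTIONS = list(range(10))              # placeholder radio configurations
q_values = {a: 0.0 for a in ACTIONS}   # learned action values (all unknown here)
rng = random.Random(0)

explored = [choose_action(ACTIONS, q_values, predicted_score, 0.56, 1.0, rng)
            for _ in range(100)]
print(sorted(set(explored)))
```

With the predictor in place, exploration time is spent only on configurations that have a reasonable chance of performing well, which is the effect the reported packet counts quantify.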

Table 4. Average Number of Packets with Multi-Objective Performance Above 0.56 [23]

Table 4 presents the differences between when the Virtual Exploration feature is disabled and when it is enabled. From these results, we can see that the number of packets experiencing performance values above 0.56 improved by 2.48 times and 3.92 times for fixed and variable exploration probabilities, respectively. In this research, the goal was to provide control over which actions are explored, based on their predicted multi-objective performance, in order to control the time spent on exploring actions with performance values above a threshold defined by the user. This research reported an overall improvement of 1.32 times and 1.42 times in the integral values of the average performance distribution. The result from this research has the potential to allow faster, more autonomous communication, either among the Perseverance mission technologies or between Mars and Earth.

Final Note on Communications

One challenge with space communication is that it is very noisy [25]. For example, when the DSN antennas (mentioned earlier) attempt to communicate with spacecraft, the signal is very weak, and it gets weaker the further the craft is from Earth. Since the antenna is receiving binary data, it picks up not only the signal from the craft but also noise from space. Another challenge is that the Earth spins, leaving only 8 hours of contact time. Moreover, each of the 3 antennas located around the world must pick up where the other left off, meaning slight interruptions can happen. Another big and important challenge is the complicated process of requesting time to use an antenna, as mentioned earlier. These are just some of the challenges that can make communications between Mars vehicles and Earth very difficult.
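On top of these challenges sits the signal travel time itself, which follows directly from the distance and the speed of light. The short calculation below is a rough illustration: the two distances are commonly cited approximate values for the closest and farthest Earth-Mars separation and are assumptions of this sketch, not figures taken from the sources above.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458

def one_way_delay_s(distance_km):
    """Time for a radio signal to cover the given distance, in seconds."""
    return distance_km / SPEED_OF_LIGHT_KM_S

# Approximate Earth-Mars distances (assumed values for illustration).
DISTANCES_KM = {"closest approach": 54.6e6, "farthest separation": 401e6}

for label, distance in DISTANCES_KM.items():
    one_way_min = one_way_delay_s(distance) / 60.0
    print(f"{label}: about {one_way_min:.1f} min one way, {2 * one_way_min:.1f} min round trip")
```

A command and its confirmation therefore take minutes to tens of minutes even under ideal conditions, which is why real-time driving from Earth is not possible.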

Conclusion and Recommendations

This Thesis was a survey of how applying various Artificial Intelligence techniques to planetary rover technologies has the potential to allow for more autonomy. This in turn could help to bridge the gap created by Mars and Earth communication delays, thus giving planetary rovers, such as the Perseverance rover, the chance to operate without the need for constant human intervention. This Thesis presented different areas of research with the goal of identifying specific Artificial Intelligence approaches that are currently being used on NASA’s Perseverance rover and Ingenuity, and ones that could one day be implemented. These encompassed Machine Learning, Reinforcement Learning, Deep Learning, and Artificial Neural Networks. The Thesis covered how communications between space vehicles and Earth are currently being done, and the current technologies that have been deployed. Mars and Earth experience major communication delays due to the sheer distance, and to attempt to bridge that gap, it is important to give the Perseverance rover and Ingenuity the capabilities to be self-sufficient and to make their own assessments of the Mars terrain. To get a better understanding of which advancements have been successful, this Thesis looked at some of NASA’s future planned missions to Mars. This Thesis explored some of the techniques that worked well and how they have reduced the need for human intervention. We conclude by noting that this field is continuing to grow, and that more research needs to be done and eventually applied to yield future successful missions. The importance of this research is to help identify which techniques are being properly executed and what can be implemented to improve the results in this area. The research conducted in these areas has the potential to help in answering the question, “Are we alone in the Universe?”

REFERENCES

Arzo, Sisay Tadesse, Dimitrios Sikeridis, Michael Devetsikiotis, Fabrizio Granelli, Rafael Fierro, Mona Esmaeili, and Zeinab Akhavan. "Essential Technologies and Concepts for Massive Space Exploration: Challenges and Opportunities." IEEE Transactions on Aerospace and Electronic Systems (2022). https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=9761732

Maki, J. N., D. Gruel, C. McKinney, M. A. Ravine, M. Morales, D. Lee, R. Willson, et al. “The Mars 2020 Engineering Cameras and Microphone on the Perseverance Rover: A Next-Generation Imaging System for Mars Exploration.” Space Science Reviews 216, no. 8 (2020). https://link.springer.com/content/pdf/10.1007/s11214-020-00765-9.pdf

Mars.nasa.gov. "Communications with Earth | Mission – NASA Mars Exploration." NASA Mars Exploration. https://mars.nasa.gov/msl/mission/communications/

mars.nasa.gov. "Mission Overview." NASA Mars Exploration. https://mars.nasa.gov/mars2020/mission/overview/

"Mars 2020/Perseverance." NASA Mars Exploration. Accessed July 24, 2022. https://mars.nasa.gov/files/mars2020/Mars2020_Fact_Sheet.pdf

Doran, Gary, David R. Thompson, and Tara Estlin. "Precision instrument targeting via image registration for the Mars 2020 rover." (2016). https://trs.jpl.nasa.gov/bitstream/handle/2014/46101/CL%2316-1757.pdf?sequence=1&isAllowed=y

"Mars 2020 Perseverance Launch Press Kit | Science." NASA/JPL. https://www.jpl.nasa.gov/news/press_kits/mars_2020/launch/mission/

mars.nasa.gov. "Mastcam-Z." NASA Mars Exploration. https://mars.nasa.gov/mars2020/spacecraft/instruments/mastcam-z/

Wiens, Roger. "SuperCam." NASA Mars Exploration. https://mars.nasa.gov/mars2020/spacecraft/instruments/supercam/

Allwood, Abigail. "PIXL." NASA Mars Exploration. https://mars.nasa.gov/mars2020/spacecraft/instruments/pixl/

Beegle, Luther. "Scanning Habitable Environments with Raman & Luminescence for Organics & Chemicals (SHERLOC)." NASA Mars Exploration. https://mars.nasa.gov/mars2020/spacecraft/instruments/sherloc/

Hecht, Michael. "MOXIE." NASA Mars Exploration. https://mars.nasa.gov/mars2020/spacecraft/instruments/moxie/

Rodriguez Manfredi, Jose A. "Mars Environmental Dynamics Analyzer (MEDA)." NASA Mars Exploration. https://mars.nasa.gov/mars2020/spacecraft/instruments/meda/

Hamran, Svein-Erik. "Radar Imager for Mars' Subsurface Exploration (RIMFAX)." NASA Mars Exploration. https://mars.nasa.gov/mars2020/spacecraft/instruments/rimfax/

NASA/JPL-Caltech. "Mars 2020 Perseverance Rover Sample Caching System – NASA Mars Exploration." NASA Mars Exploration. https://mars.nasa.gov/resources/25005/mars-2020-perseverance-rover-sample-caching-system/

NASA/JPL-Caltech. "Sample Tube in Perseverance's Coring Drill – NASA Mars Exploration." NASA Mars Exploration. https://mars.nasa.gov/resources/26117/sample-tube-in-perseverances-coring-drill/

NASA/JPL-Caltech. "Perseverance's Laser Retroreflector (Illustration) – NASA Mars Exploration." NASA Mars Exploration. https://mars.nasa.gov/resources/25273/perseverances-laser-retroreflector-illustration/

"Sobel Operator." Wikipedia, the Free Encyclopedia. Wikimedia Foundation, February 19, 2004. Accessed June 12, 2022. https://en.wikipedia.org/wiki/Sobel_operator

Abcouwer, Neil, Shreyansh Daftry, Tyler del Sesto, Olivier Toupet, Masahiro Ono, Siddarth Venkatraman, Ravi Lanka, Jialin Song, and Yisong Yue. "Machine learning based path planning for improved rover navigation." In 2021 IEEE Aerospace Conference (50100), pp. 1-9. IEEE, 2021. https://ieeexplore.ieee.org/search/searchresult.jsp?newsearch=true&queryText=Machine%20learning%20based%20path%20planning%20for%20improved%20rover%20navigation

Sasaki, Takahiro, Kyohei Otsu, Rohan Thakker, Sofie Haesaert, and Ali-akbar Agha-mohammadi. "Where to map? Iterative rover-copter path planning for mars exploration." IEEE Robotics and Automation Letters 5, no. 2 (2020): 2123-2130. https://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=8976104

mars.nasa.gov. "The Red Planet." NASA Mars Exploration. https://mars.nasa.gov/

Brockers, Roland, Jeff Delaune, Pedro Proença, Pascal Schoppmann, Matthias Domnik, Gerik Kubiak, and Theodore Tzanetos. "Autonomous safe landing site detection for a future mars science helicopter." In 2021 IEEE Aerospace Conference (50100), pp. 1-8. IEEE, 2021. https://ieeexplore.ieee.org/abstract/document/9438289

Ferreira, Paulo Victor R., Randy Paffenroth, Alexander M. Wyglinski, Timothy M. Hackett, Sven G. Bilén, Richard C. Reinhart, and Dale J. Mortensen. "Multi-objective reinforcement learning-based deep neural networks for cognitive space communications." In 2017 Cognitive Communications for Aerospace Applications Workshop (CCAA), pp. 1-8. IEEE, 2017. https://ieeexplore.ieee.org/document/8001880

"Mars Helicopter/Ingenuity." NASA Mars Exploration. https://mars.nasa.gov/files/mars2020/MarsHelicopterIngenuity_FactSheet.pdf

"Challenges of Getting to Mars: Telecommunications – NASA Mars Exploration." NASA Mars Exploration. https://mars.nasa.gov/resources/20180/challenges-of-getting-to-mars-telecommunications/

![Figure 1: NASA's Deep Space Network (DSN) [3].](https://images.squarespace-cdn.com/content/v1/659f5eec7e94cc7c76b91bdc/37d3045e-c27a-4f98-9342-198f443756f5/NASA-DSN.jpg)

![Figure 2: Mars Perseverance rover [7].](https://images.squarespace-cdn.com/content/v1/659f5eec7e94cc7c76b91bdc/4ec46350-c793-45d7-9fbf-74d1b6fddea1/Mars-Perseverance-Rover.jpg)

![Figure 3: Mars Perseverance onboard technologies [8][9][10][11][12][13][14].](https://images.squarespace-cdn.com/content/v1/659f5eec7e94cc7c76b91bdc/f326b175-96f2-409d-b980-67809f806282/Mars-Perseverance-Rover-Tech.png)

![Figure 4: Height Map (Top Left), Gradient Map (Top Right), Rover Kernel (Bottom Left), Cost Map (Bottom Right) [19].](https://images.squarespace-cdn.com/content/v1/659f5eec7e94cc7c76b91bdc/152795ab-0b65-4ac6-b2f4-a0fa84d76f6e/Maps.png)

![Figure 5: A view of the ENav simulation environment [19].](https://images.squarespace-cdn.com/content/v1/659f5eec7e94cc7c76b91bdc/b5423526-31fc-416b-9924-36c2c6c8ffdc/ENav-Map.png)

![Figure 10: Trade-off between higher and lower altitude [20].](https://images.squarespace-cdn.com/content/v1/659f5eec7e94cc7c76b91bdc/47488a90-a2e6-4ed4-abc5-0be437065f3b/Rover-Ingenuity-Trade-Off.png)

![Figure 11: A 3D reconstruction example. Left: rectified reference image from UAS flight; Right: reconstructed range image (warm colors are closer to the camera) [22].](https://images.squarespace-cdn.com/content/v1/659f5eec7e94cc7c76b91bdc/28969ab5-51fd-4ba0-a68c-08c50b847ac3/Terrain-Reconstruction.png)

![Figure 12: Reconstructed Map resolutions for different layers. Blue: base layer; Green: consecutive layers store residuals. Colored squares in each layer cover the same ground [22].](https://images.squarespace-cdn.com/content/v1/659f5eec7e94cc7c76b91bdc/d3db30e2-09ab-49a5-b398-283a45088e10/Resolution_Layers.png)

![Figure 13: Map extraction at different resolutions [22].](https://images.squarespace-cdn.com/content/v1/659f5eec7e94cc7c76b91bdc/1a3219c6-6bf7-4796-85c3-5bb0e56b12a7/Resolution-Reconstruction.png)

![Figure 14: Mars terrain for a given location, taken by the High Resolution Imaging Science Experiment (HiRISE) [20].](https://images.squarespace-cdn.com/content/v1/659f5eec7e94cc7c76b91bdc/829c1d94-9f07-45f3-b096-ab27fedca7f4/HiRISE-Images.png)

![Figure 15: Where-to-map and iterative Perseverance rover path results for Ingenuity's high-resolution and low-resolution cameras [20].](https://images.squarespace-cdn.com/content/v1/659f5eec7e94cc7c76b91bdc/094e2acd-5768-40c6-9c79-a8ae3d258e18/Path-Planning-Map.png)

![Table 1: High-Resolution Updates based on results from [20].](https://images.squarespace-cdn.com/content/v1/659f5eec7e94cc7c76b91bdc/85305b21-5d09-401a-92f0-04e639ec68e1/High-Resolution+Updates)

![Table 2: Low-Resolution Updates based on results from [20].](https://images.squarespace-cdn.com/content/v1/659f5eec7e94cc7c76b91bdc/5d8b7ecb-23c3-489f-9125-0405be9a4524/Low-Resolution+Updates)

![Table 3: Reduction Rates in Monte Carlo Simulation Result [20].](https://images.squarespace-cdn.com/content/v1/659f5eec7e94cc7c76b91bdc/12d8ae89-4491-4873-a283-c9852f88302d/Reduction+Rates+in+Monte+Carlo+Simulation+Result)

![Figure 16: Monte Carlo Simulation results with different initial starting points for the Perseverance rover [20].](https://images.squarespace-cdn.com/content/v1/659f5eec7e94cc7c76b91bdc/3733ec52-60ef-45c0-bdfd-ef279a18220e/Terrain-Planning-Map.png)

![Figure 17: The simulated flight environment [22].](https://images.squarespace-cdn.com/content/v1/659f5eec7e94cc7c76b91bdc/50386f0f-1512-4d85-97f9-6376ccba6b8d/Simulated-Flight-Enviornments.png)

![Figure 18: (a): The 3D constructed map from the randomly sized and placed rocks on a flight plane (green being safe and red being hazardous); (b): Rock detection rates using random rock distribution [22].](https://images.squarespace-cdn.com/content/v1/659f5eec7e94cc7c76b91bdc/09116ecd-40f7-4db6-b46f-0bd352c56943/Random-Sized-Rock-Terrain.png)

![Table 4: Average Number of Packets with Multi-Objective Performance Above 0.56 [23].](https://images.squarespace-cdn.com/content/v1/659f5eec7e94cc7c76b91bdc/e65ece23-7e58-4563-9586-c700ebd15358/Average+Number+of+Packets)